Author: Dmitry Mamontov

Categories: PHP Tutorials, PHP Performance

Maybe we need to buy a new server to handle the expected load, or maybe the customer wants to deploy on an existing server.

In any case, if the application shows poor performance after it is deployed and running, we need to ask the team what we can do to make the application faster, or else use a better server.

Therefore we need to determine if the application is performing well. Read this article to learn how to quickly determine the performance of an application on the current server.

Contents

Introduction

Typical Situations

Requirements for Making Measurements

The Measurement Target

Measurement Tools

How to Measure the Performance of a PostgreSQL server?

What About the Performance of PHP?

Conclusion

Introduction

Every developer wants to launch their Web applications and be ready to deal with large amounts of traffic if they become successful.

The main challenge of server performance evaluation is that it needs to be done quickly, without the use of special (read: complicated) tools and, of course, before the announcement of the application launch.

For this, we should be able to retrieve some metrics from the server and multiply the values by the performance factors of well-known applications, to estimate the performance of our application on that server.

In reality, not every developer is able to accomplish this task, and not everyone wants to do it.

In this article I want to talk about the techniques and tools that I use to evaluate the performance of a server.

Typical Situations

1. Choosing the Hosting and Server

The team is preparing to launch the application and will soon release the first version of the product. The next step is to deploy the application on a server, which means either buying a new one or customizing an existing one.

At the general project meeting, the project manager in charge asks this "simple" question: "So, who will choose the hosting and server? I will allocate the necessary amount of money from the project budget in the next step." Usually, nobody volunteers.

Furthermore, trying to delegate ("Bob, can you please do this task?") does not work. Bob instantly finds and lists at least a dozen urgent and important tasks that he is working on right now and that were due yesterday.

Following the common sense of self-preservation, the team consistently tells the project manager where to look for such a specialist (not in the immediate vicinity), who will then choose exactly the ideal server configuration.

2. Is the Server Powerful Enough

The server is provided by the customer according to the initial requirements. This looks like a perfect situation at the start of the project, but not when it comes to launching the project.

The customer asks "Is the server powerful?" The expected answer is "Yes!". After deploying, the project leader becomes sad when he looks at the Web application response times. Unpleasant thoughts come up: "Who is to blame?" and "What should I do?".

He realizes that he should have picked the server configuration himself, but on the other hand, no specialist could be found in the region. In fact, it turns out that this powerful server is a cheap VPS whose parameters looked good, but which shares the host resources with an army of hosting customers. The client has paid for the server for five years and is not going to change anything.

3. Advanced level: The Client has the Server and Has an Administrator

The server settings are satisfactory, but after deploying the application we see terrible slowdowns, lags and delays. Our development server is three times slower, but the application could be running eight times faster on a new server.

None of our proposals to replace the server or purchase a new one is accepted since, in the opinion of the administrator, it is "your" application that is slow.

The client does not know whom to believe. He also does not like the idea of a new expense, so the administrator's argument counts more.

The project manager requires the team to provide a clear explanation of "why the application slows down" and numbers proving the server's "guilt". The team, as always, is full of free time, so everyone is happy to take on the problem and to buy a beer for the specialist from another department in exchange for a tip about "where to dig."

To sum up these situations, here are the tasks we need to be able to solve:

- Choose a server for the application and its load

- Evaluate the capacity of an existing server

- Be able to answer the question "Why is it so slow?"

Requirements for Making Measurements

The most accurate way to measure the performance of a server is also the most obvious: install the application on the server and run an application that generates the actual load. Although this process gives an accurate result, it is of no use to us, because of the following requirements:

- We want to know the server capacities in advance of the launch in production

- The method of measurement must be fast and cheap

- The measuring tool should be easy to use and install on the server

- The result of the measurement must be easy to interpret and compare

The Measurement Target

The server is purchased, the operating system is installed, and the sshd daemon is running. Open the console and log in to the server. A black console, green letters and a silently blinking cursor ask you: "What's next?" It's time to think about what will be measured and what constitutes performance.

Up to this point the answer seemed simple: "Just run any benchmark, and everything will be clear." Now, facing the blinking cursor, your thoughts come to a standstill and you cannot type the necessary commands.

What Web application performance depends on to a large extent:

- The speed of the CPU + RAM access

- The speed of the disk subsystem

- Performance of the application runtime language (in this case, PHP)

- The database configuration (in our case, MySQL or PostgreSQL)

- And, of course, the application itself (which resources it uses)

We need to have four tools that could measure the speed individually:

- For server components: CPU + RAM and disk subsystem

- For software components: MySQL and PHP

With the results of the measurements, we can talk about the overall performance of the server as a whole and predict the load of Web applications.

Measurement Tools

What is sysbench?

I cannot describe this tool better than its author, so I am quoting his description:

SysBench is a modular, cross-platform and multi-threaded benchmark tool for evaluating OS parameters that are important for a system running a database under intensive load.

The idea of this benchmark suite is to quickly get an impression about system performance without setting up complex database benchmarks or even without installing a database at all.

This is what you need. Sysbench allows you to quickly get an idea of the performance of the system without having to install complex benchmarks and tools.

Install sysbench

$ apt-get install sysbench

Or you can compile it from source:

$ ./autogen.sh

$ ./configure

$ make

Check the CPU performance

To do this, we calculate the prime numbers up to twenty thousand.

$ sysbench --test=cpu --cpu-max-prime=20000 run

By default the calculation is performed on a single core. Use the --num-threads=N option to take advantage of parallel computing.

The result of the test:

Test execution summary:

total time: 17.3915s

total number of events: 10000

total time taken by event execution: 17.3875

per-request statistics:

min: 1.66ms

avg: 1.74ms

max: 4.00ms

approx. 95 percentile: 2.03ms

The interesting value in this test is total time. By running the test on multiple servers, we can compare the measurements.

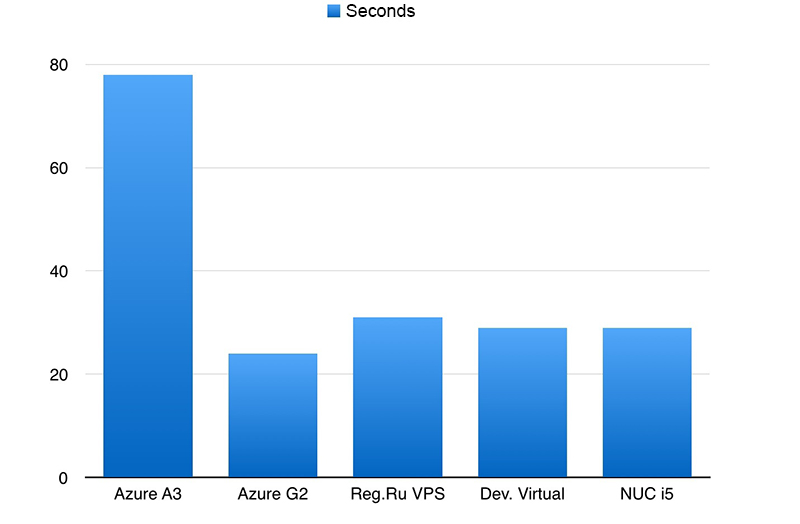

Here is an example of this test run on the servers that I had at hand while preparing this article.

Notes:

- G2 is about three times faster than the A3

- A simple VPS on Reg.Ru for $4/month is comparable to the G2 :)

- The Dev server, based on a Xeon X3440, performed as well as the NUC i5

- Surprisingly, the results are the same on four of the servers

- Perhaps the prime calculation exercises the same CPU units on all of them and so does not reflect the overall processor performance
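To compare total time values between servers without reading the reports by eye, a small helper can pull the number out of the sysbench output. This is a minimal sketch, assuming the report format shown above with one value per line; the function names sysbench_total_time and speed_ratio are hypothetical and not part of sysbench.

```shell
# Print the "total time" value in seconds from sysbench output read on stdin.
# "total time taken by event execution" is skipped because the pattern
# requires a colon right after "total time".
sysbench_total_time() {
    awk '/total time:/ { t = $NF; sub(/s$/, "", t); print t; exit }'
}

# Print how many times faster a run of $2 seconds is than a run of $1 seconds.
speed_ratio() {
    awk -v base="$1" -v other="$2" 'BEGIN { printf "%.2f\n", base / other }'
}

# Usage (requires sysbench to be installed):
#   sysbench --test=cpu --cpu-max-prime=20000 run | sysbench_total_time
```

Passing the total time of a slower server and of a faster server to speed_ratio gives a single figure of the kind used in the notes above ("about three times faster").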

Testing the Disk Performance

Checking the disk subsystem is performed in three steps:

- Prepare (generate) a set of test files

- Run the test and record the results

- Clean up the leftover files

Preparing the test files:

$ sysbench --test=fileio --file-total-size=70G prepare

This command creates a set of files with a total size of 70 gigabytes. The size should significantly exceed the amount of RAM so that the operating system cache does not affect the test result.
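The file size can be derived from the installed RAM rather than guessed. The sketch below assumes a Linux server where /proc/meminfo is available; the function name fileio_size_for_ram is hypothetical, and the default multiplier of 2 is an assumed rule of thumb, not a sysbench requirement.

```shell
# Print a --file-total-size value (in gigabytes) that is a given multiple of
# the RAM size. Reads /proc/meminfo-style text on stdin. The multiplier is
# the first argument and defaults to 2 (an assumption, adjust as needed).
fileio_size_for_ram() {
    awk -v factor="${1:-2}" '
        /^MemTotal:/ {
            ram_gb = int(($2 + 1048575) / 1048576)   # kB to GB, rounded up
            print ram_gb * factor "G"
        }'
}

# Usage (Linux only, requires sysbench to be installed):
#   size=$(fileio_size_for_ram < /proc/meminfo)
#   sysbench --test=fileio --file-total-size="$size" prepare
```

Use the same size value for the prepare and run steps so that the test reads the files it actually created.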

Test execution:

$ sysbench --test=fileio --file-total-size=70G --file-test-mode=rndrw --init-rng=on --max-time=300 --max-requests=0 run

The test runs in combined random read/write mode (rndrw) for 300 seconds, after which the results are shown. Again, by default the test is executed in a single thread (number of threads: 1).

Clean temporary files:

$ sysbench --test=fileio cleanup

An example of the result of the test:

Operations performed: 249517 Read, 166344 Write, 532224 Other = 948085

Read 3.8073Gb Written 2.5382Gb Total transferred 6.3455Gb (21.659Mb/sec)

1386.18 Requests/sec executed

Test execution summary:

total time: 300.0045s

total number of events: 415861

total time taken by event execution: 178.9646

per-request statistics:

min: 0.00ms

avg: 0.43ms

max: 205.67ms

approx. 95 percentile: 0.16ms

Threads fairness:

events (avg/stddev): 415861.0000/0.00

execution time (avg/stddev): 178.9646/0.00

As a measure of the performance of the disk subsystem, you can use the average data rate (in this example, 21.659Mb/sec).
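When the test is run on several servers, the data rate can be extracted from each report automatically. This is a sketch that assumes the report format shown above, where the rate appears in parentheses followed by "Mb/sec"; other sysbench versions may print it differently. The function name fileio_rate_mb is hypothetical.

```shell
# Print the average transfer rate (the number in front of "Mb/sec") from
# sysbench fileio output read on stdin.
fileio_rate_mb() {
    sed -n 's/.*(\([0-9.][0-9.]*\)Mb\/sec).*/\1/p'
}

# Usage (requires sysbench and previously prepared test files):
#   sysbench --test=fileio --file-total-size=70G --file-test-mode=rndrw \
#       --init-rng=on --max-time=300 --max-requests=0 run | fileio_rate_mb
```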

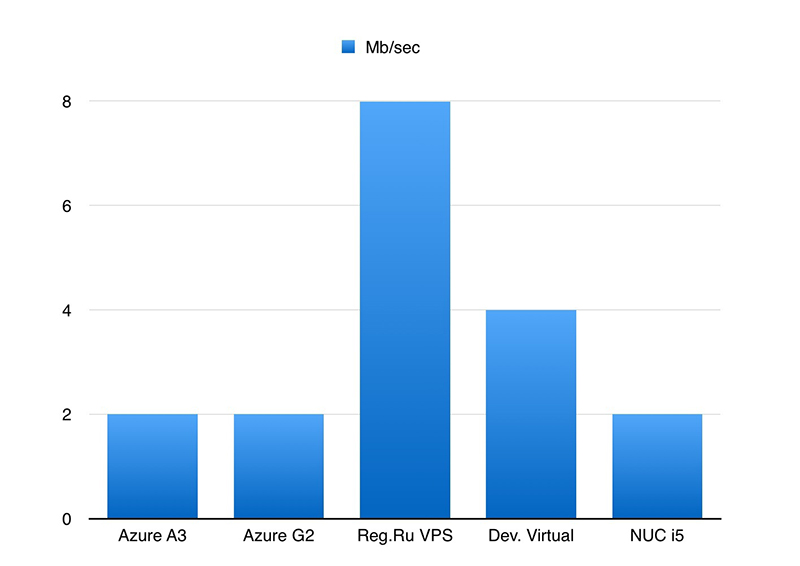

Let's see what this test showed on my servers:

Notes:

- The speed values are suspiciously low on all tested servers

- The NUC i5 has an SSD drive installed, yet no matter how many times I ran the test, the data rate was always in the range of 1.5 to 2 Mb/sec

MySQL OLTP Performance Test

The test checks the speed of MySQL server transactions. Each transaction consists of both read and write requests.

It is very convenient to change the server settings in my.cnf, restart the server and run a series of tests: you immediately see how the performance has changed.

Preparing for the test:

$ sysbench --test=oltp --oltp-table-size=1000000 --mysql-db=test --mysql-user=root --mysql-password=pass prepare

Test run:

$ sysbench --test=oltp --oltp-table-size=1000000 --mysql-db=test --mysql-user=root --mysql-password=pass --max-time=60 --oltp-read-only=off --max-requests=0 --num-threads=8 run

The parameter --oltp-read-only can be set to on. Then only read requests are executed, which lets you estimate the speed of the database on, for example, a slave database.

The result of the test (write is 0 in this sample, so it appears to come from a run with --oltp-read-only=on):

OLTP test statistics:

queries performed:

read: 564158

write: 0

other: 80594

total: 644752

transactions: 40297 (671.57 per sec.)

deadlocks: 0 (0.00 per sec.)

read/write requests: 564158 (9402.01 per sec.)

other operations: 80594 (1343.14 per sec.)

Test execution summary:

total time: 60.0040s

total number of events: 40297

total time taken by event execution: 479.8413

per-request statistics:

min: 1.14ms

avg: 11.91ms

max: 70.93ms

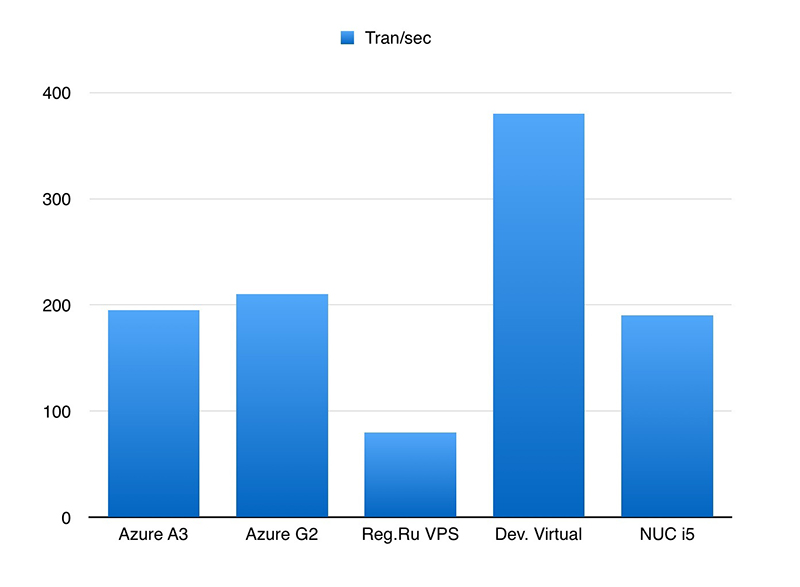

approx. 95 percentile: 15.54msThe most interesting option in the report is the number of transactions per second (transactions per sec).

As this test showed himself on the servers:

Notes:

- The MySQL configuration was the same in all servers

- The lack of significant differences between the A3 and G2 servers is surprising

- The NUC i5 is comparable to the G2
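Because a single run can be noisy, it helps to repeat the OLTP test a few times after each my.cnf change and average the transactions per second. The following is a sketch under the assumption that the report has the format shown above; the function names oltp_tps and mean are hypothetical.

```shell
# Print the transactions-per-second figure from sysbench OLTP output on stdin,
# e.g. "transactions: 40297 (671.57 per sec.)" gives 671.57
oltp_tps() {
    sed -n 's/.*transactions:[^(]*(\([0-9.][0-9.]*\) per sec.*/\1/p'
}

# Print the mean of the numbers read on stdin, one per line.
mean() {
    awk '{ sum += $1; n++ } END { if (n > 0) printf "%.2f\n", sum / n }'
}

# Usage (requires sysbench and a prepared test database):
#   for i in 1 2 3; do
#       sysbench --test=oltp --oltp-table-size=1000000 --mysql-db=test \
#           --mysql-user=root --mysql-password=pass --max-time=60 \
#           --oltp-read-only=off --max-requests=0 --num-threads=8 run | oltp_tps
#   done | mean
```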

How to measure the performance of PostgreSQL?

Unfortunately, the sysbench tool as used here has no built-in tools for PostgreSQL testing. But this does not stop us, because you can use the pgbench utility.

By default, the benchmark repeatedly executes the following transaction:

BEGIN;

UPDATE pgbench_accounts SET abalance = abalance + :delta WHERE aid = :aid;

SELECT abalance FROM pgbench_accounts WHERE aid = :aid;

UPDATE pgbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

UPDATE pgbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

END;

To create a test case run:

$ pgbench -h localhost -U test_user -i -s 100 test

Run the test:

$ pgbench -h localhost -U test_user -t 5000 -c 4 -j 4 test

The options mean that 4 clients (-c) will each perform 5000 transactions (-t), using 4 threads (-j). As a result, 20,000 transactions will be executed.

Result:

starting vacuum...end.

transaction type: TPC-B (sort of)

scaling factor: 10

query mode: simple

number of clients: 4

number of threads: 4

number of transactions per client: 5000

number of transactions actually processed: 20000/20000

latency average: 0.000 ms

tps = 3350.950958 (including connections establishing)

tps = 3357.677756 (excluding connections establishing)

The most important value here is tps.

Unfortunately, I have no comparative tests on different servers.
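The relation between the pgbench options and the reported numbers is simple arithmetic: clients times transactions per client gives the total, and the total divided by tps gives the approximate run time. A minimal sketch follows; the function names pgbench_total and pgbench_seconds are hypothetical helpers, not pgbench features.

```shell
# Print the total number of transactions pgbench will run for the given
# number of clients (-c) and transactions per client (-t).
pgbench_total() {
    echo $(( $1 * $2 ))
}

# Print the approximate run time in seconds for that many transactions at
# the given tps.
pgbench_seconds() {
    awk -v total="$1" -v tps="$2" 'BEGIN { printf "%.2f\n", total / tps }'
}

# Usage with the values from the run above:
#   pgbench_total 4 5000
#   pgbench_seconds 20000 3357.677756
```

With the values from the run above, the total is 20000, which matches the "number of transactions actually processed" line, and the whole test takes about six seconds. A run that short is easily disturbed by other activity on the server, so a larger -t value may give steadier figures.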

What About the Performance of PHP?

Armed with sysbench, and having spent plenty of kilowatts on the CPUs of many servers, we learned to assess the performance of a server quickly and adequately. This allowed us to give an expert forecast of the performance of Web applications on that server.

However, there are situations when sysbench shows a good result, yet the PHP application on the server demonstrates quite mediocre performance.

Of course, the result is influenced by parameters such as:

- PHP version

- The presence of an accelerator

- How PHP was compiled and with what

- Which extensions are enabled

I would love to have a tool that is easy to install and that, once started, gives clear performance metrics for the current PHP on the server.

I would also like this tool not to depend on the performance of the disk subsystem (or network), and to measure only the work of the PHP interpreter on the combination of CPU and memory.

A little reflection led to these conclusions:

- Such tools already exist

- It is possible to pick a suitable algorithm

- As long as the algorithm stays unchanged, we can compare the results obtained

I have found a script that can be used for that. The script is packaged as a phar file, which makes it much easier to download and run on any server. The lowest version of PHP it runs on is 5.4.

To start:

$ wget github.com/florinsky/af-php-bench/raw/master/build/phpbm.phar

$ php phpbm.phar

The script performs ten tests, divided into three groups:

- The first group covers common operations (loops, rand, creation and deletion of objects)

- The second group tests string functions, implode/explode and hash calculation

- The third group works with arrays

All measurements are made in seconds.

The test run produces a report:

[GENERAL]

1/10 Cycles (if, while, do) ...................... 6.72s

2/10 Generate Random Numbers ..................... 3.21s

3/10 Objects ..................................... 4.82s

Time: .. 14.76

[STRINGS]

4/10 Simple Strings Functions ................... 13.09s

5/10 Explode/Implode ............................ 15.90s

6/10 Long Strings ............................... 30.37s

7/10 String Hash ................................ 23.57s

Time: .. 82.93

[ARRAYS]

8/10 Fill arrays ................................ 22.32s

9/10 Array Sort (Integer Keys and Values) ....... 17.17s

10/10 Array Sort (String Keys and Values) ........ 14.29s

Time: .. 53.79

TOTAL TIME: . 151.47

The script lets you not only assess the overall performance of PHP on the server (total time), but also see what exactly is slow. More than once I have seen a mediocre overall result caused by just one test: in some cases it was a slow random number generator, in others the work with long strings.
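Finding the test that drags the total down can be automated. The sketch below assumes the report is printed one test per line, with the test number first and the time in seconds last, as in the report above; the function name slowest_test is hypothetical and not part of phpbm.

```shell
# Read a phpbm-style report on stdin and print the slowest test as
# "<number> <seconds>". Only lines that start with "N/M" are considered,
# so group headers and "Time:" subtotals are ignored.
slowest_test() {
    awk '$1 ~ /^[0-9]+\/[0-9]+$/ {
             t = $NF; sub(/s$/, "", t)
             if (t + 0 > max) { max = t + 0; id = $1; best = t }
         }
         END { if (id != "") print id, best }'
}

# Usage (requires PHP and the downloaded phar file):
#   php phpbm.phar | slowest_test
```

Comparing the slowest test across servers shows whether a poor total comes from one weak area or from generally slow PHP.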

Conclusion

I would like to add that the above tools allow us to estimate the server performance only at the time of measurement. Keep in mind that processes running in parallel can affect the server. If your tests showed good results, it does not mean that it will always be like that.

In particular, this problem manifests itself when you analyze a client's server that is already in full operation. You do not know what the cron tasks do, which processes are waiting for events to start their work, whether long gzip/tar archiving tasks are running, whether antivirus or spam filters are at work, and what other tasks may be running that you do not know about.

To analyze the server behavior, atop and iostat can help. If you collect the statistics for a few days (or longer), you can see almost everything that is going on.

atop

Writing data to a file:

$ atop -w /tmp/atop.raw 1 60

Read the record:

$ atop -r /tmp/atop.raw

iostat

Measuring CPU usage:

$ iostat -c 1

Output:

%user %nice %system %iowait %steal %idle

82.21 0.00 17.79 0.00 0.00 0.00

79.05 0.00 20.70 0.00 0.00 0.25

80.95 0.00 19.05 0.00 0.00 0.00

80.95 0.00 19.05 0.00 0.00 0.00

80.85 0.00 18.91 0.25 0.00 0.00

...

Measuring the load on the disk subsystem:

$ iostat -xd /dev/sda 1

Output:

rkB/s wkB/s await r_await w_await svctm %util

0.00 2060.00 4.05 0.00 4.05 3.98 95.60

0.00 2000.00 3.97 0.00 3.97 3.95 96.40

0.00 1976.00 3.92 0.00 3.92 3.92 95.60

0.00 2008.00 3.95 0.00 3.95 3.93 96.00

0.00 2008.00 3.92 0.00 3.92 3.92 96.80

0.00 2020.00 4.03 0.00 4.03 4.00 97.60

0.00 2016.00 3.97 0.00 3.97 3.97 97.20

...
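A long iostat log is easier to judge as one number, for example the average of the %util column. This sketch assumes the full iostat output, where each data row starts with the device name (omitted in the excerpt above) and %util is the last column; the function name mean_util is hypothetical.

```shell
# Read `iostat -xd <device> <interval>` output on stdin and print the mean of
# the last column (%util) for rows that start with the given device name.
mean_util() {
    awk -v dev="$1" '$1 == dev { sum += $NF; n++ }
                     END { if (n > 0) printf "%.2f\n", sum / n }'
}

# Usage (requires the sysstat package; collects 60 one-second samples):
#   iostat -xd /dev/sda 1 60 | mean_util sda
```

An average close to 100 means the disk was busy for almost the whole observation period, as in the sample above.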

And, of course, you can use Munin or similar programs to collect statistics from a server over a long period.

If you liked this article, share it with your fellow developers. If you have questions about evaluating and tuning performance, post a comment here.
