Author: Joseluis Laso

Package: PHP AWS S3 SDK Wrapper

Read this article to learn how to use the Amazon AWS S3 API from PHP to store your files, using caching to access them faster and reduce service costs.

Contents

What is Amazon S3?

Get an Account to Access AWS

Create Our First File Bucket

What is the Main Purpose of Having Files in an S3 Bucket?

Why Use Web Services and Not Simple FTP Access to Do This?

A Real Example

Using the PHP AWS S3 Wrapper class

Online Demo

Conclusion

What is Amazon S3?

For those not yet familiar with this service, Amazon S3 stands for Amazon Simple Storage Service. It is a service for storing and serving files that can be accessed via the Amazon Web Services (AWS) API.

Get an Account to Access AWS

First you need an account to access AWS. This step is really simple: go to the registration page to get a free account. The first time you create an Amazon account you get one year of limited free service.

If you already have an AWS account you can go to the next section. Please note that the free account has limited access to the services. At the time of writing, S3 space is limited to 5 GB and requests are limited to 20,000 reads and 2,000 writes.

Create Our First File Bucket

A bucket is basically a container for files and directories. The process to create a bucket is really simple. Click on the "Create bucket" button on the left side and, in the popup dialog, choose a unique bucket name and a region. We can have several buckets. Each bucket exists in a physical location (region).

The bucket name must be unique across the whole S3 system, but it is not as important as the folders you can create later. The bucket name is needed only for identification purposes. You should select the region closest to your users in order to minimize the delay in serving their data requests.

What is the Main Purpose of Having Files in an S3 Bucket?

You can use S3 buckets for many purposes. This is not an exhaustive list of things that we can use S3 buckets for:

A place to automatically save backup data

A place where clients can queue lists of tasks to process

A place to store processed data of clients

To implement a collaborative online editor

To have independence and scalability

Etcetera

Why Use Web Services and Not Simple FTP Access to Do This?

A Real Example

Let's say that we need a service from another company, for example a service that processes the data we put in a JSON file. The other company processes the data and returns another file as the result.

Let's also say that the folder from which the other company reads the data file must be read-only for that company's user, while the company that writes the results has both read and write permissions.

The only thing we have to do is give our internal department, the one that generates the JSON data file, this class to access the AWS S3 bucket, as well as the AWS key and secret needed to access it.

We will do the same for the external company. We will give them the PHP class. They need their own user account to access AWS S3. We will only give this user permission to write in our bucket. Actually, they could even be the owners of the bucket.
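The permission split described above could be expressed as access policies attached to each user. The following is only a sketch: the helper buildFolderPolicy and the folder names input and results are hypothetical and are not part of the wrapper class. It assumes the standard IAM policy document layout with the s3:GetObject and s3:PutObject actions.

```php
<?php
// Hypothetical helper (not part of the S3Wrapper class): builds an IAM-style
// policy document granting access to the objects under one folder of a bucket.
function buildFolderPolicy(string $bucket, string $folder, bool $canWrite): array
{
    // s3:GetObject allows reads; s3:PutObject allows writes.
    $actions = $canWrite ? ['s3:GetObject', 's3:PutObject'] : ['s3:GetObject'];

    return [
        'Version'   => '2012-10-17',
        'Statement' => [[
            'Effect'   => 'Allow',
            'Action'   => $actions,
            // Matches every object key that starts with "<folder>/".
            'Resource' => 'arn:aws:s3:::' . $bucket . '/' . trim($folder, '/') . '/*',
        ]],
    ];
}

// Read-only policy for the user that only consumes the data file (assumed folder name).
$readerPolicy = buildFolderPolicy('my-test-bucket', 'input', false);
// Read and write policy for the user that delivers the results (assumed folder name).
$writerPolicy = buildFolderPolicy('my-test-bucket', 'results', true);

echo json_encode($readerPolicy, JSON_PRETTY_PRINT | JSON_UNESCAPED_SLASHES), PHP_EOL;
```

The printed JSON is the kind of document you would paste into the AWS console when defining the user's permissions. Listing the folder contents would need an additional statement, which is left out here to keep the sketch short.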

Once a day (or with the agreed frequency) we will look for new files in the input bucket and process them, perhaps making them available to our final users, or creating a report or chart as the result. The AWS SDK provides hooks to automate processing tasks on new files.
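The daily check for new files can be sketched as a filter over a bucket listing. The helper filesSince is hypothetical, and the sample data stands in for a real listing. Each entry is assumed to have the same shape as the items returned by the getFilesList() method of the wrapper class presented later in this article.

```php
<?php
// Hypothetical helper: keeps only the entries modified after the previous run.
// Each entry is assumed to look like:
// ['timestamp' => <Unix time>, 'filename' => <object key>].
function filesSince(array $files, int $lastRun): array
{
    $new = array_filter($files, function (array $file) use ($lastRun) {
        return (int) $file['timestamp'] > $lastRun;
    });
    // Re-index so the result is a plain list.
    return array_values($new);
}

// Sample data standing in for a real bucket listing.
$listing = [
    ['timestamp' => '1700000000', 'filename' => 'input/monday.json'],
    ['timestamp' => '1700090000', 'filename' => 'input/tuesday.json'],
];

// Pretend the previous run happened between the two uploads.
$pending = filesSince($listing, 1700050000);
foreach ($pending as $file) {
    echo "To process: {$file['filename']}", PHP_EOL;
}
```

In a real job the time of the last run would be stored somewhere persistent, for example in a small file or a database row, and updated after each successful pass.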

Obviously this same control flow could be implemented by accessing the files via FTP, for instance. But consider that AWS S3 allows you to create more buckets in other parts of the world. You can scale this system according to your real needs. It can be backed up periodically. And best of all, you can determine the cost of using the service with great accuracy.

With an FTP server, you have to know in advance the permissions and the disk space you need on the server in order to provide a reliable service to your users. With AWS S3 you can provide a reliable service with minimal effort.

Using the PHP AWS S3 Wrapper class

Accessing AWS S3 from PHP is really easy. The only thing that seems to be missing is a cache system that minimizes the number of accesses to S3, in order to reduce costs and improve the user experience.

This class is a wrapper that encapsulates all the methods that you are going to need to access S3 in a simple way, with read access improved by local caching support.
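To make the caching idea concrete, here is a minimal sketch of one way a local cache can avoid repeated downloads. It is not the wrapper's actual code: the function fetchWithCache and its parameters are hypothetical, and the download is simulated by a callback so the example never contacts AWS.

```php
<?php
// Hypothetical helper, not the wrapper's actual code: returns the path of a
// local copy of a remote file, calling $download only when the cache is stale.
function fetchWithCache(string $localPath, int $remoteModified, callable $download): string
{
    // Make sure PHP does not answer from its internal stat cache.
    clearstatcache(true, $localPath);
    // The cache is stale when the local copy is missing or older than the remote one.
    if (!is_file($localPath) || filemtime($localPath) < $remoteModified) {
        file_put_contents($localPath, $download());
        // Align the local modification time with the remote one.
        touch($localPath, $remoteModified);
    }
    return $localPath;
}

// Demonstration with a fake download function that counts its calls.
$calls = 0;
$fakeDownload = function () use (&$calls) {
    $calls++;
    return "hello from S3";
};

$path = sys_get_temp_dir() . '/s3-cache-demo-' . uniqid() . '.txt';
fetchWithCache($path, 1700000000, $fakeDownload); // cache miss: downloads
fetchWithCache($path, 1700000000, $fakeDownload); // cache hit: no download
fetchWithCache($path, 1700000500, $fakeDownload); // remote is newer: downloads again
$content = file_get_contents($path);
unlink($path);
```

Only two of the three calls trigger a download. With a real bucket, every avoided download is one less billed read request and one less network round trip.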

The class can be added to your projects very easily. You can use it in two ways: an automatic one that needs a small configuration file, and a manual one in which you invoke the constructor explicitly, passing the S3 access keys.

Let's say that we have a file in S3 called test.txt in the root of the bucket my-test-bucket. The access keys for S3 are access_key_id=1234 and secret_access_key=5678. Let's see how to use both ways, the automatic one and the manual one.

Automatic

Prepare a config.ini file inside the src folder of the project. You can copy the sample file config.ini.sample, which is in the same folder as the class.

src/config.ini

    [s3]
    bucket=my-test-bucket
    access_key_id=1234
    secret_access_key=5678
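How the wrapper reads this file is not shown in this article. As a rough sketch, PHP's built-in INI parser can load a file in this format. The example below parses the same contents from a string so that it runs on its own, and the variable names are only illustrative.

```php
<?php
// Sample contents mirroring the src/config.ini shown above.
$ini = <<<INI
[s3]
bucket=my-test-bucket
access_key_id=1234
secret_access_key=5678
INI;

// The second argument (true) keeps the [s3] section as a nested array.
// For a file on disk, parse_ini_file($path, true) works the same way.
$config = parse_ini_string($ini, true);

$bucket = $config['s3']['bucket'];
$key    = $config['s3']['access_key_id'];
$secret = $config['s3']['secret_access_key'];

echo "Bucket: $bucket", PHP_EOL;
```

Keeping the keys in a separate file like this makes it easier to exclude them from version control.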

example.php

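    // Local directory where cached copies of S3 files are kept
    $cache = __DIR__ . "/../cache/";
    // Access keys and bucket name are taken from src/config.ini
    $s3 = \JLaso\S3Wrapper\S3Wrapper::getInstance();
    // Path of a local copy of /test.txt; the cache is used when possible
    $file = $s3->getFileIfNewest($cache . "test.txt", "/test.txt");
    $content = file_get_contents($file);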

Manual

example.php

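    // Local directory where cached copies of S3 files are kept
    $cache = __DIR__ . "/../cache/";
    // Access key, secret access key and bucket name are passed explicitly
    $s3 = new \JLaso\S3Wrapper\S3Wrapper("1234", "5678", "my-test-bucket");
    // Path of a local copy of /test.txt; the cache is used when possible
    $file = $s3->getFileIfNewest($cache . "test.txt", "/test.txt");
    $content = file_get_contents($file);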

Basically, the proposed class simply encapsulates calls to the official PHP SDK:

    public function getFilesList($path = "")
    {
        $files = array();
        $options = array(
            'Bucket' => $this->bucket,
        );
        // Restrict the listing to the given path, without descending into subfolders
        if ($path) {
            $options['Prefix'] = $path;
            $options['Delimiter'] = '/';
        }
        // The SDK iterator fetches additional result pages as needed
        $iterator = $this->s3Client->getIterator('ListObjects', $options);
        foreach ($iterator as $object) {
            $files[] = array(
                // Last modification time as a Unix timestamp
                'timestamp' => date("U", strtotime($object['LastModified'])),
                'filename' => $object['Key'],
            );
        }
        return $files;
    }
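The Prefix and Delimiter options in the method above decide which keys appear in a listing. As an illustration only, the following sketch imitates that filtering locally on a plain array of keys. The function listUnderPrefix and the sample keys are hypothetical, and no request is sent to AWS.

```php
<?php
// Hypothetical, local imitation of S3's Prefix/Delimiter filtering.
// Keys directly under the prefix are returned as files; deeper keys are
// rolled up into folder-like entries (S3 calls them common prefixes).
function listUnderPrefix(array $keys, string $prefix, string $delimiter = '/'): array
{
    $files = [];
    $folders = [];
    foreach ($keys as $key) {
        if (strncmp($key, $prefix, strlen($prefix)) !== 0) {
            continue; // key is outside the requested prefix
        }
        $rest = (string) substr($key, strlen($prefix));
        $pos = strpos($rest, $delimiter);
        if ($pos === false) {
            $files[] = $key;
        } else {
            // Keep the part up to and including the first delimiter.
            $folders[$prefix . substr($rest, 0, $pos + 1)] = true;
        }
    }
    return ['files' => $files, 'folders' => array_keys($folders)];
}

// Sample keys standing in for the contents of a bucket.
$keys = ['test.txt', 'input/a.json', 'input/b.json', 'input/2024/c.json'];
$result = listUnderPrefix($keys, 'input/');
print_r($result);
```

Here input/2024/c.json is not reported as a file of input/, which matches the way getFilesList lists only the direct contents of the path it receives.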

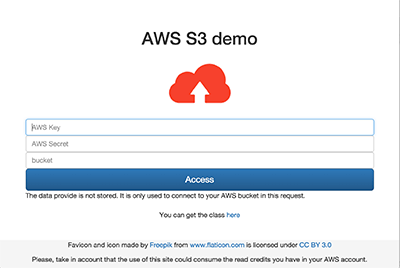

Online Demo

There is a very simple live online example of this package. It first asks for your AWS keys so that it can access your bucket as yourself. Then it presents a list of the files in the selected bucket. Finally, you can see the content of the selected file. Pretty easy.

You can see the online demo here.

Conclusion

As you can see, accessing the AWS S3 service to store and retrieve files is very easy. Moreover, when you use the PHP AWS S3 Wrapper class you benefit from caching support, so access can be even faster and your AWS S3 costs lower.

If you liked this article or you have a question regarding accessing the AWS S3 service, post a comment here.
